Power bi embedded resource can be somewhat one of the most expensive resources you will run related to your reporting frontend. The current auto-scale that Microsoft provides, isn’t really real-time and may not be suitable, when trying to provide Power bi service when users really use reports.

Overview

I will show and explain how you can scale your Power bi up and down, how to suspend and then resume the capacity automatically.

Use cases to solve:

- We have report users only in business hours

- Seasonality, we know that Mondays, first week of the month and end of the month we have more data consumers

- When more than 10x users login-in to reports, we need to automatically scale up and when they leave scale down

- When semantic model is updated, we need bigger capacity, trigger when data is ready in your data source

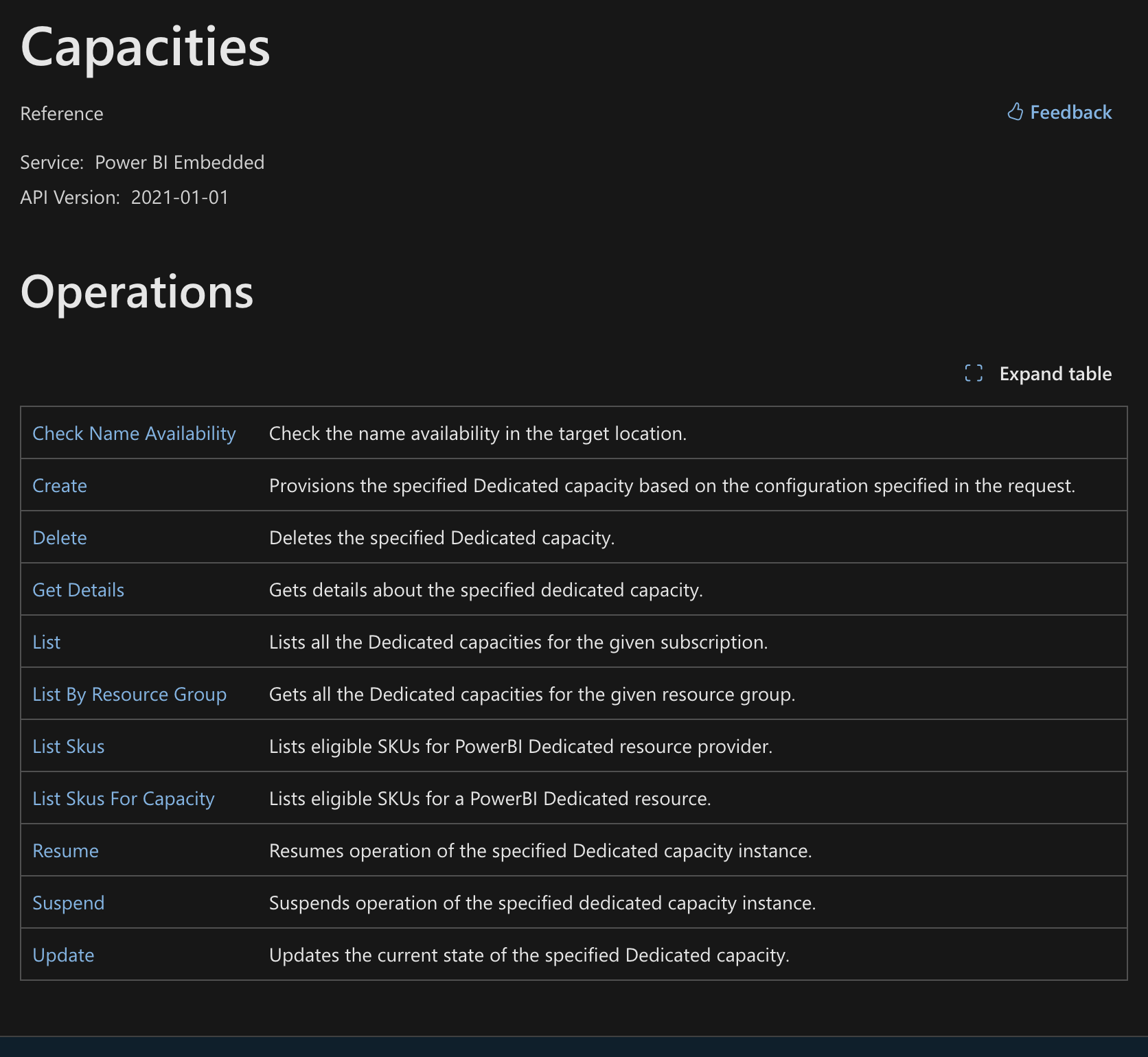

Power bi embedded resource capacities

Embedded resource in Azure itself is very simple, there are not many things you can do with it. Here is the list of operations that you can perform with:

In this blog we will look at Resume, Suspend and update.

Prerequisites:

Before proceeding, make sure you have the following:

To make changes to Azure resources, you need to have permissions. I would suggest to use a service principal for that, contributor role is more that enough for managing the resource. If you already have a service principal to create the resource itself, you can use that for this demo.

Service principal with access to the resource in Azure.

Getting started, Authentication

This example will use python to get things done.

Package that you will need:

from azure.identity import ClientSecretCredential

Tenantid, clientid and client secret. With these you will get the token, that can be used for making changes to the resource.

from azure.identity import ClientSecretCredential

client_secret = ""

client_id =""

tenant_id =""

credential = ClientSecretCredential(tenant_id, client_id, client_secret)

access_token = credential.get_token('https://management.azure.com/.default').token

Resume or suspend

Now that you have the token, lets suspend or resume the capacity:

For that you will need several things:

Subscription id:

resource group name:

Power bi embedded capacity name:

import requests

from azure.identity import ClientSecretCredential

subscription_id = ""

resource_group = ""

dedicatedCapacityName = ""

status = "suspend" or "resume" # There can be only one value, it is either suspend or resume

url = f"https://management.azure.com/subscriptions/{subscription_id}/resourceGroups/{resource_group}/providers/Microsoft.PowerBIDedicated/capacities/{dedicatedCapacityName}/{status}?api-version=2021-01-01"

payload = ""

headers = {

"Authorization": f"Bearer {access_token}", # Token

"Content-Type": "application/json"

}

response = requests.post(url, headers=headers, data=payload)

print(response.status_code)

This way you can either suspend or resume your Power bi embedded capacity.

Change capacity sku

When there is need to change Power bi capacity sku, this is how you can do that.

You will need these:

Capacity sku:

Subscription id:

resource group name:

Power bi embedded capacity name:

This is a PATCH function, that will update the capacity.

import requests

from azure.identity import ClientSecretCredential

sku = "A7" # example

subscription_id = ""

resource_group = ""

capacity_name = ""

payload = {

"sku": {

"name": sku

}

}

url = f"https://management.azure.com/subscriptions/{subscription_id}/resourceGroups/{resource_group}/providers/Microsoft.PowerBIDedicated/capacities/{capacity_name}?api-version=2017-10-01"

credential = ClientSecretCredential(tenant_id, client_id, client_secret)

access_token = credential.get_token('https://management.azure.com/.default').token

headers = {

"Authorization": f"Bearer {access_token}",

"Content-Type": "application/json"

}

response = requests.patch(url, headers=headers, data=json.dumps(payload))

print(response.text)

Checking the metrics of Power bi embedded

There is a way to scale your power bi embedded automatically when CPU hits some level. There are several ways to do that, one is function app that will be triggered when CPU goes to a threshold and another one is checking every 15 that is the situation. With the trigger there is a 5 minute delay, when the function app is triggered. Here is an example how to get power bi CPU from azure metrics.

NB! Metrics needs to be enabled for the resource.

from azure.identity import ClientSecretCredential

from datetime import datetime, timedelta

##

Getting credentials

##

resource_id = '/subscriptions/{}/resourceGroups/{}/providers/Microsoft.PowerBIDedicated/capacities/{}'.format(

subscription_id,

resource_group,

capacity_name

)

resource_url = resource_id

start_time = datetime.utcnow() - timedelta(hours=1) # this will get 1 hour metrics

end_time = datetime.utcnow()

metric_data = client.metrics.list(

resource_uri=resource_id,

timespan="{}/{}".format(start_time.isoformat(), end_time.isoformat()),

interval='PT1M', # Example: retrieve data every 1 minute

metricnames='cpu_metric',

aggregation='Average'

)

Checking the current sku

Before you scale your sku up or down you need to check the sku, this is very simple

from azure.mgmt.powerbidedicated import PowerBIDedicated

capacity_name = ""

resource_group = ""

#credential, from previous scripts

client = PowerBIDedicated(credential, subscription_id)

resource = client.capacities.get_details(resource_group, capacity_name)

sku = resource.sku

print(sku.name)

Combining everything to make a change

- Get the metrics

- Sku

- Change the sku

- Evaluate

The Evaluation is just a demo, when to do change and scale up or down. There is also a limitation. The example will go not go over A5, this means that the budget will not go over the maximum prices of A5 capacity and budget will always have the upper limit.

from azure.identity import ClientSecretCredential

from azure.mgmt.monitor import MonitorManagementClient

from azure.mgmt.powerbidedicated import PowerBIDedicated

from datetime import datetime, timedelta

import json

import requests

# Define your Azure subscription ID and resource group name

subscription_id = ''

resource_group = ''

capacity_name = ''

tenant_id = ""

client_id = ''

client_secret = ''

# Define the Azure resource ID for your Power BI Embedded instance

resource_id = '/subscriptions/{}/resourceGroups/{}/providers/Microsoft.PowerBIDedicated/capacities/{}'.format(

subscription_id,

resource_group,

capacity_name

)

credential = ClientSecretCredential(tenant_id, client_id, client_secret)

client = MonitorManagementClient(credential, subscription_id)

# Define the time range for which you want to retrieve metrics

start_time = datetime.utcnow() - timedelta(hours=1) # this will get 1 hour metrics

end_time = datetime.utcnow()

# Retrieve the metric data for CPU average

def get_metric_data():

metric_data = client.metrics.list(

resource_uri=resource_id,

timespan="{}/{}".format(start_time.isoformat(), end_time.isoformat()),

interval='PT1M', # Example: retrieve data every 1 minute

metricnames='cpu_metric',

aggregation='Average'

)

sum_average = 0

def get_metrics(metric_data):

# Print the metric values

for metric in metric_data.value:

print("Metric Name:", metric.name.localized_value)

for timeseries in metric.timeseries:

for data in timeseries.data:

print("Timestamp:", data.time_stamp)

print("Average CPU Percentage:", data.average)

# Update the sum variable

sum_average += data.average

# You can access other properties such as min, max, and total as well

print("---------")

# Print the sum of average values

print("Sum of Average CPU Percentage:", sum_average)

get_metric_data()

def get_pbi_sku():

#this will get the current PowerBIDedicated sku

client = PowerBIDedicated(credential, subscription_id)

resource = client.capacities.get_details(resource_group, capacity_name)

sku = resource.sku

return sku.name

print(get_pbi_sku())

def changecapacity(sku_size):

payload = {

"sku": {

"name": sku_size

}

}

print(payload)

url = f"https://management.azure.com/subscriptions/{subscription_id}/resourceGroups/{resource_group}/providers/Microsoft.PowerBIDedicated/capacities/{capacity_name}?api-version=2017-10-01"

access_token = credential.get_token('https://management.azure.com/.default').token

headers = {

"Authorization": f"Bearer {access_token}",

"Content-Type": "application/json"

}

response = requests.patch(url, headers=headers, data=json.dumps(payload))

print(response.status_code)

print(response.text)

def evaluate(sum_average):

if 5 <= sum_average <= 50 or get_pbi_sku() == "A5":

print(f'No need to scale {get_pbi_sku()}')

elif sum_average <= 5:

sku_size = "A1"

print(sku_size)

print(f'Scale down A{int(get_pbi_sku()[1]) - 1}')

else:

if sum_average >= 70:

sku_size_up = {int(get_pbi_sku()[1]) + 1}

sku_size = "A" + str(sku_size_up)

print(f'Start scaling up A{int(get_pbi_sku()[1]) + 1}')

#changecapacity(sku_size="A5")

if sum_average == 100 and sum_average >= 100 and get_pbi_sku() == "A5":

print(f'Maximum capacity has been reached for resource: ')

evaluate(sum_average)

Conclusion

If you have more than 1 Power bi embedded capacity, these examples will save you a lot of money, both from short and long term. There are many ways to combine these scripts and work with them, based on you business needs. For running this script. Considering that this can either be a cron or triggered based on a specific time function app can solve the problem. When you need to run the script only mornings and evenings, use logic app/ Power flow, the http request can be very easily converted into posts.